Borderline Regression Analysis in Qpercom

Borderline regression analysis (BRA) is an absolute, examinee-centered standard setting method that is widely used to set standards for OSCE exams (Yousuf, Violato, & Zuberi, 2015). Candidates are awarded a “global score” for each station in a circuit, based on the examiner’s professional judgment of their ability. BRA is one of the methods Qpercom’s software uses for retrospective standard setting of OSCE exams (Meskell et al., 2015; Schoonheim-Klein et al., 2009).

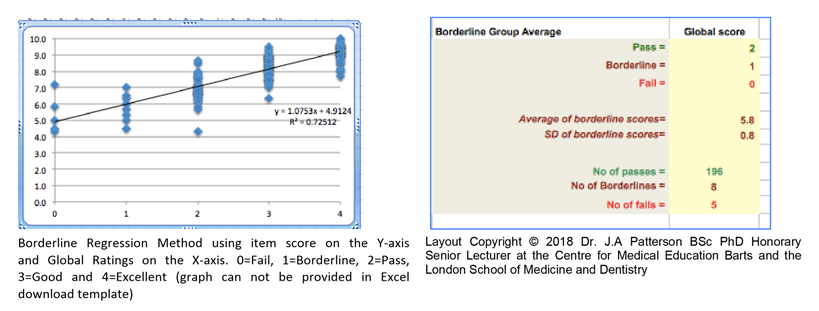

Borderline Regression Method with item scores on the Y-axis and Global Ratings on the X-axis (0 = Fail, 1 = Borderline, 2 = Pass, 3 = Good, 4 = Excellent). The graph cannot be included in the Excel download template.

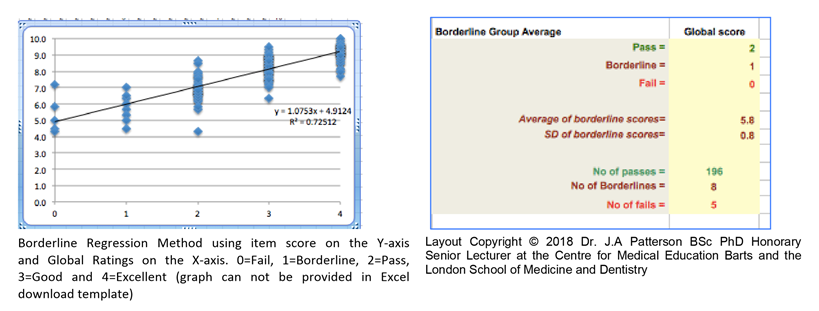

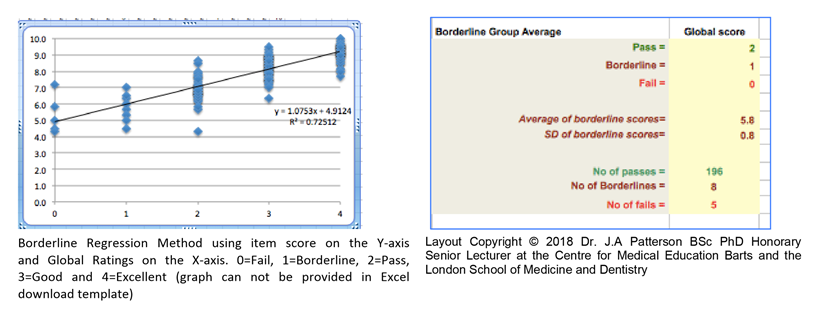

In Qpercom’s OSCE Management Information System/Observe, three types of Borderline Regression Analysis are available:

1. Borderline Group Average
2. Borderline Regression (forecast) Method 1
3. Borderline Regression (forecast) Method 2

Borderline Group Average takes the average checklist score of all examinees rated ‘Borderline’ (1) and is considered unreliable when only a few (< 10) candidates are rated borderline. In that case, Method 1 or 2 is recommended, because they take into account all of the Global Rating Scores, not just the Borderline scores (Dwyer et al., 2016).
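A minimal sketch of the Borderline Group Average for one station, assuming each candidate has one checklist score and one global rating on the 0 to 4 scale in the caption above. The function name, the `MIN_BORDERLINE_CANDIDATES` constant (taken from the “< 10” guideline in the text) and the sample data are hypothetical, not Qpercom’s implementation.

```python
from statistics import mean

# Hypothetical threshold based on the "< 10" guideline in the text: with fewer
# borderline candidates, the group average is flagged as unreliable.
MIN_BORDERLINE_CANDIDATES = 10


def borderline_group_average(checklist_scores, global_ratings, borderline=1):
    """Return (cut_score, reliable) for one station.

    cut_score is the mean checklist score of candidates whose global rating
    equals `borderline`; reliable is False when fewer than
    MIN_BORDERLINE_CANDIDATES candidates received that rating.
    """
    if len(checklist_scores) != len(global_ratings):
        raise ValueError("each candidate needs one checklist score and one rating")
    group = [s for s, g in zip(checklist_scores, global_ratings) if g == borderline]
    if not group:
        raise ValueError("no candidate was rated borderline")
    return mean(group), len(group) >= MIN_BORDERLINE_CANDIDATES


# Illustrative data only: ratings use 0=Fail, 1=Borderline, 2=Pass, 3=Good, 4=Excellent.
scores = [3.0, 5.5, 6.0, 6.5, 7.5, 8.0, 9.0, 4.5]
ratings = [0, 1, 1, 1, 2, 3, 4, 0]
cut, reliable = borderline_group_average(scores, ratings)
print(cut, reliable)  # 6.0 False (only 3 borderline candidates)
```

Only three candidates are rated borderline here, so the sketch returns a cut-score but flags it as unreliable, which is exactly the situation in which the regression methods are preferred.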

Regression Methods 1 and 2 differ in the type of Global Rating Score (GRS) used. Method 1 uses a single Borderline score (represented by 1), whereas Method 2 uses separate scores for Borderline Fail (represented by 1) and Borderline Pass (represented by 2). In the example shown, the cut-score for Method 2 (6.53) is higher than for Method 1 (5.99), because the borderline point in Method 2 lies midway between Borderline Fail and Borderline Pass (Homer, Pell, Fuller, & Patterson, 2016).
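The two cut-score rules can be written as a worked equation. Here y is the checklist score, x the global rating, and a and b the intercept and slope of the regression line; these symbols are introduced for illustration. The subscripts indicate that each method’s line is fitted on its own rating scale, and the 1.5 for Method 2 is the midpoint between Borderline Fail (1) and Borderline Pass (2).

```latex
\begin{aligned}
\hat{y} &= a + b\,x \\
C_{1} &= a_{1} + 1 \cdot b_{1} && \text{(Method 1: Borderline} = 1) \\
C_{2} &= a_{2} + 1.5 \cdot b_{2} && \text{(Method 2: } \tfrac{1 + 2}{2} = 1.5)
\end{aligned}
```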

Standard setting procedures can be categorized as either exam-centered, in which expert judges review the content of the test (e.g., the Angoff method), or examinee-centered, in which expert decisions are based on the actual performance of the examinees, as in the BRA of Qpercom’s software. The examiner rates the performance at each station by completing a checklist and a global rating scale. The checklist marks of all examinees at each station are then regressed on their global rating scores, giving a linear equation. The global score representing borderline performance (1 in Method 1, or 1.5, midway between Borderline Fail (1) and Borderline Pass (2), in Method 2) is substituted into this equation to predict the pass/fail cut-score for the checklist marks. This is the Forecast method: the cut-score equals the Intercept plus 1 or 1.5 times the Slope of the regression line.
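A minimal Python sketch of the regress-then-forecast procedure described above, assuming an ordinary least-squares fit of checklist score (Y) on global rating (X); Qpercom’s exact implementation is not confirmed here. The function names `fit_line` and `borderline_regression_cut` and the sample data are hypothetical. The forecast step computes intercept + point × slope, the same linear prediction a spreadsheet FORECAST function returns.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y on x; returns (intercept, slope)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("global ratings must not all be identical")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def borderline_regression_cut(checklist_scores, global_ratings, borderline_point):
    """Regress checklist scores (Y) on global ratings (X), then forecast the
    checklist score at the borderline point: intercept + point * slope."""
    intercept, slope = fit_line(global_ratings, checklist_scores)
    return intercept + borderline_point * slope


# Illustrative data only. In practice Method 2 ratings come from its own scale
# (Borderline Fail = 1, Borderline Pass = 2), so each method is fitted separately.
ratings = [0, 1, 1, 2, 3, 3, 4]
scores = [2.0, 3.0, 5.0, 6.0, 7.0, 9.0, 10.0]
cut_method_1 = borderline_regression_cut(scores, ratings, borderline_point=1)
cut_method_2 = borderline_regression_cut(scores, ratings, borderline_point=1.5)
print(cut_method_1, cut_method_2)  # 4.0 5.0
```

The fit is written out by hand to keep the sketch dependency-free; with NumPy, `numpy.polyfit(ratings, scores, 1)` would return the same slope and intercept (highest degree first). Because every candidate contributes to the fitted line, the cut-score stays usable even when few candidates are rated borderline.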

References

Dwyer, T., Wright, S., Kulasegaram, K. M., Theodoropoulos, J., Chahal, J., Wasserstein, D., . . . Ogilvie-Harris, D. (2016). How to set the bar in competency-based medical education: standard setting after an Objective Structured Clinical Examination (OSCE). BMC Med Educ, 16, 1. doi:10.1186/s12909-015-0506-z

Homer, M., Pell, G., Fuller, R., & Patterson, J. (2016). Quantifying error in OSCE standard setting for varying cohort sizes: A resampling approach to measuring assessment quality. Med Teach, 38(2), 181-188. doi:10.3109/0142159X.2015.1029898

Meskell, P., Burke, E., Kropmans, T. J., Byrne, E., Setyonugroho, W., & Kennedy, K. M. (2015). Back to the future: An online OSCE Management Information System for nursing OSCEs. Nurse Educ Today, 35(11), 1091-1096. doi:10.1016/j.nedt.2015.06.010

Schoonheim-Klein, M., Muijtjens, A., Habets, L., Manogue, M., van der Vleuten, C., & van der Velden, U. (2009). Who will pass the dental OSCE? Comparison of the Angoff and the borderline regression standard setting methods. Eur J Dent Educ, 13(3), 162-171. doi:10.1111/j.1600-0579.2008.00568.x

Yousuf, N., Violato, C., & Zuberi, R. W. (2015). Standard Setting Methods for Pass/Fail Decisions on High-Stakes Objective Structured Clinical Examinations: A Validity Study. Teach Learn Med, 27(3), 280-291. doi:10.1080/10401334.2015.1044749

For more information on Entrust, email info@qpercom.com.
